For me, it all started with a simple but stubborn question:

—How can I make my AWS Lambdas even faster?

And you could say:

—But Alejandro, they’re already fast. Response times are under a second.

Yeah, I know. But I want more. I want the user experience to feel instant, with interactions applying themselves as if by magic.

I use AWS Lambda regularly, and fine, I’ve made peace with cold starts. A small group of users will pay the price of being serverless. But I don’t want secondary tasks to cost users extra latency. That trade-off doesn’t sit well with me.

So… what if you could run tasks like event dispatching or garbage collection after responding to the user? What if you could give your Lambda a second life after the return?

The Problem

In AWS Lambda, once your handler returns, the execution environment may be frozen or terminated. Threads, async operations, and any remaining work are not guaranteed to complete. But what if you need to do something right after returning the response? Things like:

- Sending metrics or events.
- Manually running Python’s Garbage Collector (GC) to prevent memory bloat in warm containers.

We tried starting a background thread in the handler, but it didn’t work. Lambda freezes the execution environment as soon as the handler returns, regardless of any background threads that are still running.
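For reference, a naive attempt of this kind looks roughly like the sketch below. The names `send_metrics` and `naive_handler` are made up for illustration, and the 0.2 second sleep stands in for a real network call. Run locally, the thread finishes because nothing freezes the process; inside Lambda there is no such guarantee once the handler has returned.

```python
import threading
import time

# Set by the background thread once its work is finished
metrics_sent = threading.Event()


def send_metrics():
    """Pretend to push metrics to some backend (hypothetical task)."""
    time.sleep(0.2)
    metrics_sent.set()


def naive_handler(event, context):
    # Fire and forget: nothing waits for this thread
    threading.Thread(target=send_metrics, daemon=True).start()
    # The response goes out while send_metrics is still sleeping
    return {"statusCode": 200}
```

The handler returns before `metrics_sent` is set, which is exactly the window in which Lambda can freeze the environment.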

Internal Lambda Extensions

According to AWS’s documentation, if you run a background thread inside a Lambda extension, it can continue processing even after the handler finishes. The trick is to:

-

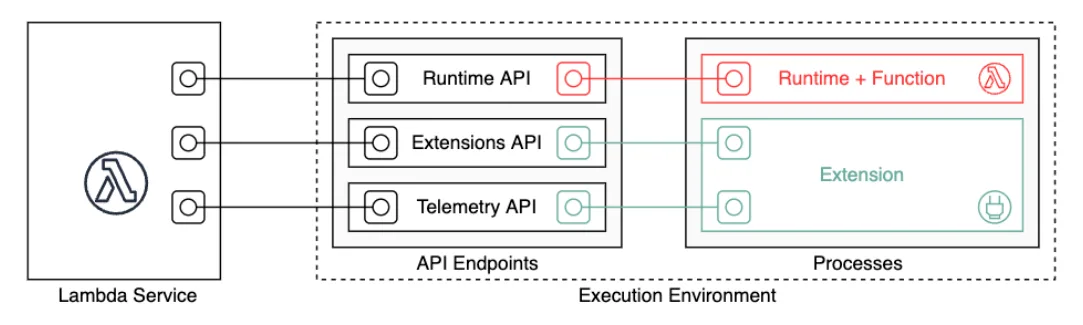

Register the internal extension (Figure 1).

-

Delay the

/event/nextcall until your async task is done. -

Use a shared queue between the handler and the extension thread.

Figure 1. The Extensions API and the Telemetry API connect Lambda and external extensions.

How AWS Lambda extensions work

To understand how internal extensions delay the “end” of the Lambda invocation, it’s helpful to break down what actually happens around the /extension/event/next API.

Each call to this endpoint signals that your extension is ready for a new event, and indirectly, that it’s done with the current one. Here’s how that plays out:

| Step | You do… | Lambda thinks… |
|---|---|---|
| 1 | POST /extension/register | “OK, you’re in the loop.” |
| 2 | GET /extension/event/next | “Here’s a new Invoke event for you.” |
| 3 | Run async task after handler ends | “Still working… waiting for the extension.” |
| 4 | Call GET /extension/event/next again | “Got it. Invocation complete.” |

Calling /extension/event/next again is what signals completion to Lambda. Until you make that call, your internal extension is still considered busy with the current invocation, and the environment won’t be frozen or shut down.
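To make the two endpoints concrete, here is a small sketch that only builds the HTTP requests, using the standard library instead of `requests`. The helper names `build_register_request` and `build_next_request` are assumptions for illustration; the paths, headers, and API version are the ones used later in this post.

```python
import json
import urllib.request

API_VERSION = "2020-01-01"  # Extensions API version used in this post


def build_register_request(runtime_api, extension_name):
    """POST /extension/register: subscribe the extension to INVOKE events."""
    return urllib.request.Request(
        url=f"http://{runtime_api}/{API_VERSION}/extension/register",
        data=json.dumps({"events": ["INVOKE"]}).encode("utf-8"),
        headers={"Lambda-Extension-Name": extension_name},
        method="POST",
    )


def build_next_request(runtime_api, extension_id):
    """GET /extension/event/next: blocks until the next event arrives.

    Sending it also tells Lambda the extension is done with the current event.
    """
    return urllib.request.Request(
        url=f"http://{runtime_api}/{API_VERSION}/extension/event/next",
        headers={"Lambda-Extension-Identifier": extension_id},
        method="GET",
    )
```

Inside a function, `runtime_api` comes from the `AWS_LAMBDA_RUNTIME_API` environment variable and `extension_id` from the `Lambda-Extension-Identifier` header of the register response; each request can then be sent with `urllib.request.urlopen`.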

The Design

Knowing this, we implemented an internal extension using Python threads and a queue.Queue.

- The extension registers with /extension/register and waits on /extension/event/next.
- The Lambda handler runs.
- A task is added to the queue just before the handler returns.
- Once the async task finishes, the extension completes the current invocation by calling /extension/event/next again.

This delays freezing the Lambda environment until we’ve done the cleanup.
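Before looking at the real thing, the hand-off can be tried out locally with a toy simulation. This is only a sketch under stated assumptions: `run_one_invocation` is a made-up helper, and a second `queue.Queue` stands in for the blocking call to /extension/event/next, so no Lambda APIs are involved.

```python
import queue
import threading


def run_one_invocation(event):
    """Simulate one invocation and return the order in which things happened."""
    timeline = []
    tasks = queue.Queue()          # handler -> extension hand-off
    invoke_signal = queue.Queue()  # stand-in for the blocking GET /extension/event/next

    def post_process(payload):
        timeline.append(f"post-processing {payload}")

    def extension_loop():
        invoke_signal.get()                # "Lambda" delivers the INVOKE event
        task, args = tasks.get(timeout=2)  # wait for the handler to hand over work
        task(*args)
        # Only now would the real extension call /extension/event/next again
        timeline.append("extension asks for next event")

    def handler(payload):
        response = {"statusCode": 200}
        timeline.append("handler returns response")
        tasks.put((post_process, (payload,)))  # queued just before returning
        return response

    worker = threading.Thread(target=extension_loop)
    worker.start()
    invoke_signal.put(event)
    response = handler(event)
    worker.join(timeout=5)
    return response, timeline
```

Calling `run_one_invocation("order-42")` yields a timeline in which the response comes first, the post-processing second, and the request for the next event last, which is the ordering the design relies on.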

The Code

    # Standard Library
    import logging
    import os
    import queue
    import threading

    # Dependencies
    import requests

    logger = logging.getLogger(__name__)

    LAMBDA_EXTENSION_NAME = "AsyncProcessor"

    # Queue where the function handler submits async tasks
    async_tasks_queue = queue.Queue()


    def start_async_processor():
        """Start the internal extension that handles async tasks."""
        runtime_api = os.environ.get("AWS_LAMBDA_RUNTIME_API")
        if not runtime_api:
            logger.warning("[%s] Not running inside Lambda, skipping extension registration", LAMBDA_EXTENSION_NAME)
            return

        logger.debug("[%s] Registering extension", LAMBDA_EXTENSION_NAME)
        response = requests.post(
            url=f"http://{runtime_api}/2020-01-01/extension/register",
            json={"events": ["INVOKE"]},
            headers={"Lambda-Extension-Name": LAMBDA_EXTENSION_NAME},
        )
        ext_id = response.headers["Lambda-Extension-Identifier"]
        logger.debug("[%s] Registered with ID: %s", LAMBDA_EXTENSION_NAME, ext_id)

        def extension_loop():
            """Internal thread that handles task processing and signaling Lambda."""
            while True:
                try:
                    # First: Wait for an invocation
                    logger.debug("[%s] Waiting for invocation...", LAMBDA_EXTENSION_NAME)
                    requests.get(
                        url=f"http://{runtime_api}/2020-01-01/extension/event/next",
                        headers={"Lambda-Extension-Identifier": ext_id},
                        timeout=None,
                    )

                    # Then: Process the async task
                    logger.debug("[%s] Woke up, checking for async task", LAMBDA_EXTENSION_NAME)
                    async_task, args = async_tasks_queue.get(timeout=8)  # Lambda timeout is 10s
                    if async_task is not None:
                        logger.debug("[%s] Running async task", LAMBDA_EXTENSION_NAME)
                        async_task(*args)
                        logger.debug("[%s] Async task completed", LAMBDA_EXTENSION_NAME)

                    # Finally: Only now call /event/next again for the next invocation
                except queue.Empty:
                    logger.warning("[%s] No async task found after invocation", LAMBDA_EXTENSION_NAME)
                except Exception as e:
                    logger.exception("[%s] Extension thread failed: %s", LAMBDA_EXTENSION_NAME, e)

        # Start extension thread
        threading.Thread(target=extension_loop, daemon=True, name="AsyncProcessorExtension").start()


    def submit_async_task(task_func, args=()):
        """Submit a task to be executed after the handler returns."""
        async_tasks_queue.put((task_func, args))


    # Start extension immediately at import
    start_async_processor()

Usage in the Handler

    import gc
    import json
    import logging
    from http import HTTPStatus

    from extensions import async_processor  # Internal extension module

    logger = logging.getLogger(__name__)


    def async_postprocessing(request, response, trace_context):
        # Optional: re-attach trace context here
        try:
            # Simulate sending an event or doing heavy post-processing
            logger.info("Async task: sending event after response")
        except Exception as e:
            logger.exception("Error in async task: %s", e)
        finally:
            gc.collect()


    def lambda_handler(event, context):
        gc.disable()  # Disable GC for performance
        # Defined up front so the finally block never hits an unbound name
        # when the handler fails before building a response
        response = None
        try:
            body = json.loads(event["body"])
            # Simulate processing logic
            response = {"message": "Success", "input": body}
            return {
                "statusCode": HTTPStatus.OK,
                "body": json.dumps(response),
            }
        except Exception as e:
            logger.exception("Error in Lambda handler: %s", e)
            return {
                "statusCode": HTTPStatus.INTERNAL_SERVER_ERROR,
                "body": json.dumps({"message": "Internal error"}),
            }
        finally:
            # Runs just before the return value is handed back to Lambda
            async_processor.submit_async_task(
                async_postprocessing, (event, response, None)
            )

The Outcome

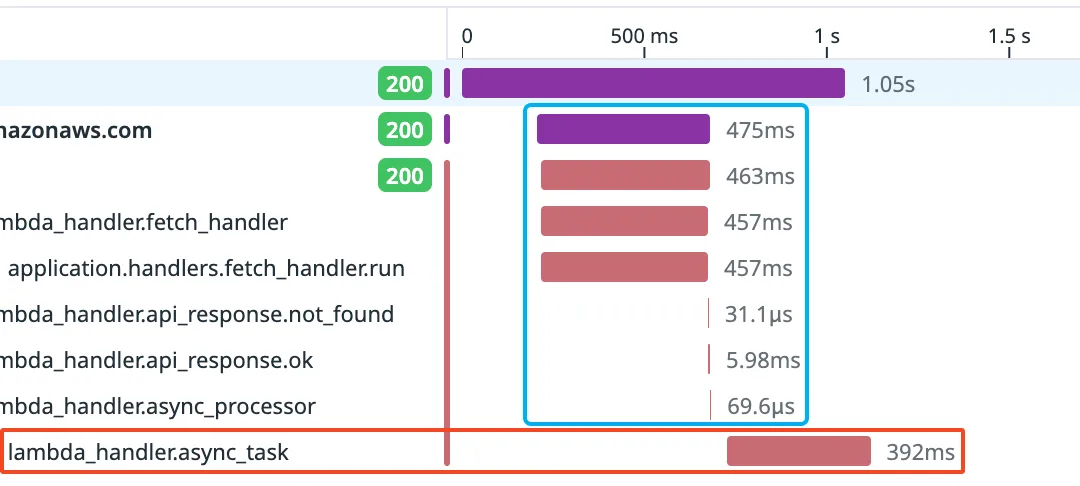

It was possible to separate the lambda execution. Event dispatch and GC collect happen after the response returns (Figure 2). It’s fully internal, deploys with our Lambda, and doesn’t rely on any external function or destination.

Figure 2. Lambda execution of the extension after the return. Lambda function in Blue, extension in Red.
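The GC half of that split can be reproduced outside Lambda. The sketch below is illustrative only: `Node`, `handle_request`, and `deferred_cleanup` are made-up names, and the self-referencing objects simply guarantee that there is cyclic garbage for the collector to find.

```python
import gc


class Node:
    """Object that references itself, so only the cycle collector can free it."""

    def __init__(self):
        self.me = self


def handle_request(n_objects):
    """Stand-in for handler work that leaves cyclic garbage behind."""
    for _ in range(n_objects):
        Node()
    return {"statusCode": 200}


def deferred_cleanup():
    """Post-response step: run one full collection and report what it found."""
    return gc.collect()


gc.disable()   # no automatic collections on the hot path
gc.collect()   # start from a clean slate
response = handle_request(1000)
unreachable = deferred_cleanup()  # number of unreachable objects found
gc.enable()    # restore the default for the rest of the process
```

With automatic collection disabled, the garbage created during `handle_request` stays in memory until `deferred_cleanup` runs, so the cost of collecting it moves out of the request path.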

When to Use This

This pattern is ideal if:

- You need post-processing after returning a response.
- You want to stay within one Lambda artifact.
- You’re okay with the complexity of writing an internal extension.
- The post-processing is short enough to finish before the Lambda timeout.
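One way to check the last condition at runtime is to look at the time left before queuing the task. This is a sketch, not part of the code above: `has_time_for` and its one-second default margin are assumptions, and `FakeContext` only exists so the example runs locally. The `get_remaining_time_in_millis()` method is the one the Python runtime exposes on the real context object.

```python
def has_time_for(context, estimated_ms, safety_margin_ms=1000):
    """Return True if a task estimated at `estimated_ms` fits before the timeout."""
    remaining_ms = context.get_remaining_time_in_millis()
    return remaining_ms - safety_margin_ms >= estimated_ms


class FakeContext:
    """Local stand-in for the Lambda context object (illustration only)."""

    def __init__(self, remaining_ms):
        self._remaining_ms = remaining_ms

    def get_remaining_time_in_millis(self):
        return self._remaining_ms


plenty = has_time_for(FakeContext(8000), estimated_ms=2000)
too_tight = has_time_for(FakeContext(2500), estimated_ms=2000)
```

A handler could call `has_time_for(context, ...)` right before submitting the task and skip or shrink the post-processing when the answer is no.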

Avoid it if you need retries or fault tolerance; in that case, consider invoking a second async Lambda instead. Also, keep in mind that if your Lambda times out, the post-processing is aborted too.
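For comparison, handing the work to a second function could look like the sketch below. The helper `dispatch_to_second_lambda` and the function name `post-processor` are hypothetical; the client is passed in so the snippet has no hard dependency on boto3, and the `invoke` call with `InvocationType="Event"` is the standard asynchronous invocation.

```python
import json


def dispatch_to_second_lambda(lambda_client, function_name, payload):
    """Fire-and-forget invoke; returns True if Lambda accepted the event."""
    result = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # async: Lambda queues the event and returns immediately
        Payload=json.dumps(payload).encode("utf-8"),
    )
    return result["StatusCode"] == 202  # 202 means accepted for async invocation


# Inside a real function (requires boto3 and permission to invoke the target):
#   import boto3
#   dispatch_to_second_lambda(boto3.client("lambda"), "post-processor", {"order_id": 42})
```

The trade-off is an extra function to deploy and an extra API call on the request path, in exchange for the retry behaviour of asynchronous invocations.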

References

- Running code after returning a response from an AWS Lambda function: AWS’s official example of how to decouple background work from user-facing latency using Lambda internals.
- AWS Lambda Extensions API: Documentation on how to build and register custom extensions that can observe lifecycle events and run logic outside the main handler.
- Python queue.Queue: Thread-safe queue implementation used to buffer post-response tasks between the Lambda handler and background thread.